
Human + Machine: Designing Empathetic CX for Science & Research Publishing

Posted on: October 21st 2025

Research publishing should feel fast, clear, and human. That means treating every interaction as one continuous conversation that preserves context and answers high-anxiety questions in plain, policy-aware language. AI’s role in research publishing is to provide that continuity: retrieve the right rules, infer intent, maintain the thread, and flag urgency so people can focus on ethics, edge cases, and reassurance.

In short, AI prepares; humans decide and connect.

The New Baseline: Fast, Clear, and Human

Science and research (S&R) publishing serves many audiences at once: authors, reviewers, editors, librarians, research administrators, and readers. Each touches different systems (submission and peer review, production, access and entitlements, and discovery), and each expects status clarity, plain language, and guidance grounded in policy and scope.

Empathy, in this setting, is practical. It means anticipating high-anxiety moments (“Where is my manuscript?”, “Why was this desk-rejected?”, “What does my funder require?”) and responding with specific, contextual answers rather than generic scripts. Empathy isn’t decoration at the end of a workflow; it’s precision at every handoff.

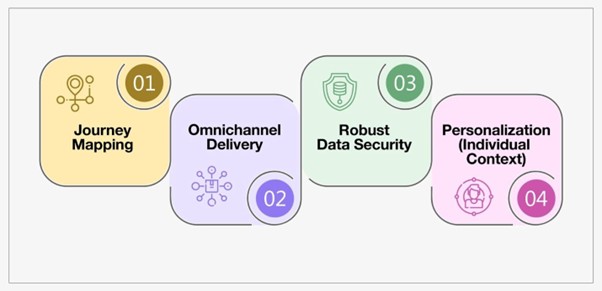

Authors working to deadlines want predictable timelines and direct answers. Reviewers, who donate scarce time, expect transparent processes and respectful communication. Editors are accountable for integrity as well as throughput. Librarians and OA administrators need entitlement clarity, accurate metadata, and policy-consistent routes to content. A sound CX foundation must bring together four pillars that reinforce each other (Exhibit 1).

Exhibit 1 – Empathetic CX at Scale: Four Design Anchors

Two problems compound in complex journeys: context gets lost, and prioritization breaks down. AI earns its place in science and research publishing when it fixes both.

Where AI Changes the Experience for Research Publishing Without Replacing Judgment

Assistants grounded in policy, scope, ethics, and style guidance can retrieve relevant passages, infer intent (“appeal,” “scope check,” “APC query,” “access issue”), and keep a single thread intact as a conversation moves from bot to agent to editor.
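To make intent inference and the "single thread" idea concrete, here is a minimal sketch. It assumes a simple keyword lookup rather than a trained model, and every name in it (`INTENT_KEYWORDS`, `infer_intent`, `Thread`, `hand_off`) is hypothetical, not part of any specific platform. The point is structural: the inferred intent and the full message history travel with the conversation as it moves from bot to agent to editor.

```python
from dataclasses import dataclass, field

# Hypothetical keyword map; a production assistant would more likely use a
# trained classifier grounded in policy and scope documents.
INTENT_KEYWORDS = {
    "appeal": ("appeal", "reconsider", "dispute the decision"),
    "scope check": ("in scope", "fit for", "suitable for this journal"),
    "APC query": ("apc", "article processing charge", "invoice", "waiver"),
    "access issue": ("paywall", "can't access", "cannot access", "login"),
}


def infer_intent(message: str) -> str:
    """Return the first intent whose keywords appear in the message, else 'general'."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "general"


@dataclass
class Thread:
    """One continuous conversation that keeps its context across handoffs."""

    thread_id: str
    owner: str = "bot"
    intent: str = "general"
    history: list = field(default_factory=list)

    def add_message(self, sender: str, text: str) -> None:
        self.history.append((sender, text))
        # Keep the first specific intent detected; later messages add context only.
        if self.intent == "general":
            self.intent = infer_intent(text)

    def hand_off(self, new_owner: str) -> None:
        # Ownership changes, but history and intent stay with the thread.
        self.history.append(("system", f"handed off from {self.owner} to {new_owner}"))
        self.owner = new_owner


if __name__ == "__main__":
    thread = Thread("T-1")
    thread.add_message("author", "I'd like to appeal the desk rejection.")
    thread.hand_off("agent")
    thread.hand_off("editor")
    print(thread.owner, thread.intent, len(thread.history))  # editor appeal 3
```

The editor who receives the thread sees the original request and every handoff, so the author never has to repeat themselves.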

Sentiment and intent signals help operations triage real urgency rather than react to volume. These patterns (drafting replies, document-level retrieval, conversation audits, product and sentiment analytics) are commonplace in modern contact-center operations and translate readily to editorial and research-publishing work.
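Triage by urgency rather than volume can be sketched as a priority queue: each conversation gets a score from its intent, sentiment, and deadline, and the most urgent item is served first regardless of when it arrived. The weights, the two-day deadline threshold, and the assumption that sentiment arrives as a number between -1 and 1 from an upstream model are all illustrative, not a recommended calibration.

```python
import heapq
from dataclasses import dataclass, field

# Hypothetical weights: how much each intent contributes to urgency.
INTENT_WEIGHT = {"appeal": 3.0, "access issue": 2.0, "APC query": 1.5, "scope check": 1.0}


@dataclass(order=True)
class Ticket:
    sort_key: float                      # negated urgency, so heapq pops the most urgent first
    ticket_id: str = field(compare=False)


def urgency(intent: str, sentiment: float, days_to_deadline: int) -> float:
    """Combine intent, negative sentiment, and deadline proximity into one score.

    sentiment is assumed to come from an upstream model, in [-1.0, 1.0].
    """
    score = INTENT_WEIGHT.get(intent, 0.5)
    score += max(0.0, -sentiment) * 2.0  # only negative sentiment raises urgency
    if days_to_deadline <= 2:
        score += 2.0
    return score


def triage(tickets):
    """Yield ticket ids from most to least urgent, regardless of arrival order."""
    heap = [Ticket(-urgency(*signals), ticket_id) for ticket_id, signals in tickets]
    heapq.heapify(heap)
    while heap:
        yield heapq.heappop(heap).ticket_id


if __name__ == "__main__":
    incoming = [
        ("T-1", ("scope check", 0.2, 30)),
        ("T-2", ("access issue", -0.8, 1)),
        ("T-3", ("APC query", -0.1, 10)),
    ]
    print(list(triage(incoming)))  # ['T-2', 'T-3', 'T-1']
```

The distressed author with a deadline tomorrow (T-2) jumps ahead of an earlier, calmer scope question, which is the behavior "urgency over volume" describes.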

The result isn’t automation instead of people. It’s continuity at scale, so people can spend time where empathy and judgment matter most. The science and research ecosystem introduces requirements that sharpen how “good CX” is defined:

Integrity first. Systems should help surface questionable submissions early and point editors to relevant policy or ethics guidance. Empathy includes explaining outcomes clearly and outlining next steps when issues arise.

Time-to-publish as a design outcome. Assisted triage, reviewer operations, and workflow orchestration can shorten submit-to-decision and accept-to-publish cycles while keeping final decisions with humans.

Authors in multiple roles. A unified view that disambiguates identities and connects contributions across roles (author, reviewer, editor) enables precise, respectful communication. Status updates should reference the right person and record, not a generic account.

Evolving OA models. Guidance around transformative agreements, licensing structures, and funder mandates should be embedded into the journey so eligibility and choices are obvious, not buried in policy PDFs.

Scalable operations. Automation and platform-centric delivery help teams handle volume without diluting tone or quality. In this context, governance and security are features users can feel—clear labels for AI involvement, consistent escalation paths, and auditability that protects trust.

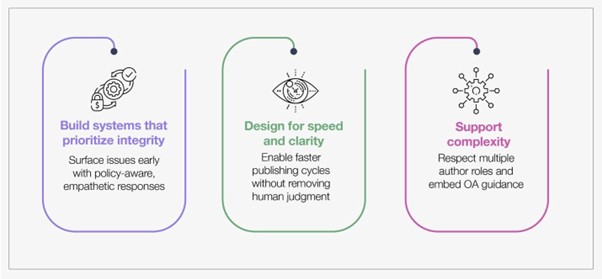

Exhibit 2 – Design Principles for Empathetic Research Publishing with AI

Humans own the calls with ethical and reputational weight, such as authorship disputes, conflicts of interest, appeals, and nuanced edge cases. They also offer reassurance: coaching first-time authors, de-stressing reviewers, and clarifying decisions. AI maintains the scaffolding that keeps empathy intact: retrieving exact rules, highlighting gaps and deadlines, rewriting for clarity and tone, and surfacing patterns like reviewer drop-off or recurring access failures.

Human Judgment, System Clarity, and Scalable Trust

Effective CX in publishing draws on broader support patterns, such as tiered support, multi-channel access, and knowledge-driven scaling, to manage complexity without losing clarity or tone. Trust is built when users understand how the system works, which makes transparency, governance, and human oversight essential.

Confidence starts early. Targeted invitations, clear author profiles, and a responsive helpdesk set the tone. Policy and scope questions get specific answers—with citations, not generalities—helping authors understand fit and alternatives upfront.

During submission, AI handles checks like scope alignment and metadata completeness. It supports peer review by suggesting suitable reviewers and drafting invitations. Integrity signals flag issues for human review, improving readiness without replacing judgment.
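The submission checks described above can be sketched as a readiness report that lists gaps for a person to review instead of rejecting anything automatically. The required-field list, the ORCID check, and the `readiness_report` function are illustrative assumptions; each journal would define its own requirements, and scope alignment would need a separate model not shown here.

```python
# Hypothetical required fields; each journal would define its own list.
REQUIRED_FIELDS = ("title", "abstract", "keywords", "authors", "data_availability")


def readiness_report(submission: dict) -> dict:
    """Flag missing metadata for a human to review; never auto-reject."""
    missing = [name for name in REQUIRED_FIELDS if not submission.get(name)]
    # Assumed identity check: authors listed without an ORCID iD.
    authors_without_orcid = [
        author.get("name", "?")
        for author in submission.get("authors", [])
        if not author.get("orcid")
    ]
    return {
        "missing_fields": missing,
        "authors_without_orcid": authors_without_orcid,
        # The check only routes the submission; an editor still decides.
        "needs_human_review": bool(missing or authors_without_orcid),
    }


if __name__ == "__main__":
    submission = {
        "title": "Placeholder title",
        "abstract": "Placeholder abstract.",
        "keywords": ["placeholder"],
        "authors": [
            {"name": "A. Author", "orcid": "placeholder-id"},
            {"name": "B. Author"},
        ],
    }
    print(readiness_report(submission))
    # {'missing_fields': ['data_availability'], 'authors_without_orcid': ['B. Author'], 'needs_human_review': True}
```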

Post-acceptance, production workflows highlight missing elements, such as funding notes or accessibility tags, so teams can verify them quickly. Accessibility should be embedded from the start, with audits against established standards such as WCAG. Auto-generated alt text can help, but human review ensures quality.

After publication, structured metadata and conversational search help readers find and reuse content. If access is restricted, systems should explain why and offer alternatives—like open versions or repository links. Librarian helpdesks handle common queries and escalate as needed.

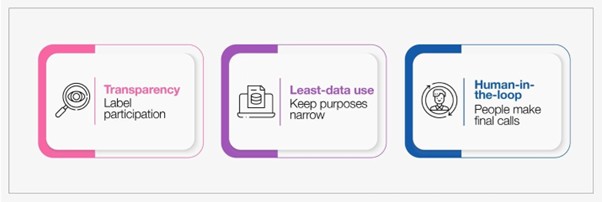

Exhibit 3 – How to Keep AI Accountable in Editorial Workflows

Least-data use is key because it minimizes risk and reinforces trust. When AI can reach only governed, relevant knowledge sources, and not sensitive manuscripts, privacy is protected, usage stays purpose-specific, and system behavior is easier to audit and control.
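One way to picture least-data use is as an allowlist applied before retrieval results ever reach the model, with each allow or block decision logged so behavior can be audited later. The source names, the `Document` type, and `governed_context` are hypothetical; a real deployment would enforce this in the retrieval layer and access-control system rather than in a single function.

```python
from dataclasses import dataclass

# Hypothetical allowlist: only governed, purpose-specific knowledge sources.
ALLOWED_SOURCES = {"editorial_policy", "ethics_guidance", "scope_statement", "style_guide"}


@dataclass(frozen=True)
class Document:
    source: str
    doc_id: str
    text: str


def governed_context(candidates, audit_log):
    """Keep only allowlisted documents and record every decision for audit."""
    kept = []
    for doc in candidates:
        allowed = doc.source in ALLOWED_SOURCES
        audit_log.append((doc.doc_id, doc.source, "allowed" if allowed else "blocked"))
        if allowed:
            kept.append(doc)
    return kept


if __name__ == "__main__":
    log = []
    docs = [
        Document("editorial_policy", "P-12", "Policy text (placeholder)"),
        Document("manuscript", "M-881", "Unpublished manuscript text (placeholder)"),
    ]
    context = governed_context(docs, log)
    print([d.doc_id for d in context])  # ['P-12']
    print(log)  # [('P-12', 'editorial_policy', 'allowed'), ('M-881', 'manuscript', 'blocked')]
```

Blocking by default and logging both outcomes is what makes the behavior auditable: a reviewer can see not only what the assistant used, but what it was prevented from seeing.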

Straive’s Perspective: From Reactive Fixes to Predictive Care

Programs mature when they move upstream from ticket closure to risk prevention. In research publishing, that means finding inflection points (reviewer invitation windows where responses decay, submission steps where authors stall, geographies or institutions with recurring access issues) and intervening before frustration spikes. A modern CX stance places agentic assistance alongside human oversight to optimize cost, personalization, and resilience without eroding editorial judgment. Multimodal aids, such as brief walkthroughs for complex figures or guidance for code and data reuse, help users progress quickly in their own context.

About the Author

Priya Roy is the Vice President of Delivery Leadership at Straive, driving operational excellence and transformative client solutions. She brings deep expertise in managing large-scale customer experience and technology programs.
