Why High-Quality Data Annotation Is the Foundation of Accurate Media AI Models

Posted on: February 13th 2026

Today’s digital economy is moving faster than ever, generating vast amounts of data across platforms and users. As AI adoption accelerates in 2026, machine learning is becoming central to digital workflows, from content creation and discovery to moderation and monetization.

Yet many organizations find that AI systems struggle to deliver consistent results once deployed at scale.

The issue rarely lies in the sophistication of the algorithm. Gartner predicts that nearly 30% of generative AI initiatives will be abandoned or fail to scale, mainly because of gaps in data quality, governance, and readiness.

Training data problems are most visible in Media AI, where models must work across text, audio, video, and user behavior. That breadth makes accurate data annotation essential for scale and reliability.

Why AI Accuracy Breaks at Scale

AI systems often perform well in controlled tests but struggle to maintain accuracy in real-world deployments. Industry research continues to show that data readiness, not model capability, is the primary reason AI fails to scale.

Data Quality and Context Loss

AI models reflect the quality of their training data. Inaccurate labels, outdated content, and data shift degrade accuracy as data volume and variation increase.
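One common way to detect the data shift described above is to compare the label distribution of new data against a baseline. The minimal sketch below computes the Population Stability Index (PSI) over categorical labels. The category names are hypothetical, and the 0.25 alert level is a widely used rule of thumb, not a value from this article.

```python
import math
from collections import Counter


def label_distribution(labels, categories, eps=1e-6):
    """Share of each category in a batch, floored at eps so log() never sees zero."""
    counts = Counter(labels)
    total = len(labels)
    return {c: max(counts[c] / total, eps) for c in categories}


def population_stability_index(baseline, current):
    """PSI = sum over categories of (cur - base) * ln(cur / base)."""
    categories = sorted(set(baseline) | set(current))
    base = label_distribution(baseline, categories)
    cur = label_distribution(current, categories)
    return sum((cur[c] - base[c]) * math.log(cur[c] / base[c]) for c in categories)


# Hypothetical content-category labels from last quarter vs. this week
baseline = ["news"] * 50 + ["sports"] * 30 + ["music"] * 20
current = ["news"] * 20 + ["sports"] * 30 + ["music"] * 50
psi = population_stability_index(baseline, current)
print(f"PSI = {psi:.3f}", "(shift: review labels)" if psi > 0.25 else "(stable)")
```

Here the mix moves from 50/30/20 to 20/30/50, giving a PSI of about 0.55, well above the rule-of-thumb threshold. A check like this only flags that the data has moved; deciding whether labels or guidelines need updating still takes human review.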

Operational Complexity

As teams scale AI, they expose fragile processes that worked during pilots. Fragmented ownership and inconsistent data definitions introduce errors that models cannot correct on their own.

Inconsistent Annotation Standards

As AI scales across teams and vendors, labeling standards start to shift. Teams tag the same content differently over time, breaking consistency and weakening how models learn.
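Drift between annotators can be measured rather than guessed. A standard metric is Cohen's kappa, which compares how often two annotators agree with the agreement expected by chance alone. The minimal sketch below assumes two annotators label the same set of items; the "safe"/"unsafe" labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability both pick label c independently, summed over labels
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


annotator_1 = ["safe", "safe", "unsafe", "safe", "unsafe", "safe"]
annotator_2 = ["safe", "unsafe", "unsafe", "safe", "unsafe", "safe"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

The two annotators agree on 5 of 6 items, but because half of that agreement would be expected by chance, kappa is about 0.67. Tracking kappa per team or vendor over time makes a slow divergence in labeling standards visible before it reaches training data.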

Human Review Does Not Scale

Manual review cannot keep pace with the growing volume of AI output. Teams must choose between speed and control, increasing either errors or operational cost.

Model and Algorithmic Limits

Models trained on clean, controlled datasets often struggle when they encounter edge cases in real-world operations. Overfitting and repeated exposure to synthetic data further weaken reliability over time.

Technical and Economic Constraints

Adding more data or compute does not automatically improve accuracy. After a certain point, gains flatten while infrastructure costs continue to rise, forcing teams to balance precision against scale.
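This flattening is often modeled as a power-law learning curve, in which error falls toward an irreducible floor as training data grows. The parameters below are invented for illustration and are not measured from any media model. The point is the shape: each doubling of data buys a smaller accuracy gain than the last.

```python
def error_rate(n_examples, floor=0.05, scale=2.0, exponent=0.3):
    """Hypothetical learning curve: error = floor + scale * n^(-exponent)."""
    return floor + scale * n_examples ** (-exponent)


def gain_per_doubling(start=10_000, doublings=5, **curve):
    """Error reduction achieved by each successive doubling of the training set."""
    gains = []
    n = start
    for _ in range(doublings):
        gains.append(error_rate(n, **curve) - error_rate(2 * n, **curve))
        n *= 2
    return gains


for i, gain in enumerate(gain_per_doubling()):
    print(f"doubling {i + 1}: error drops by {gain:.4f}")
```

With these made-up parameters, each doubling recovers only about 81% of the previous doubling's gain (2^-0.3 ≈ 0.81), while data volume, and typically labeling and compute cost, doubles. Improving label quality shifts the curve itself rather than sliding further along it.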

What Is Data Annotation and Why Does It Matter?

Data annotation is the process of labeling raw data so that it has a structure machine learning models can learn from. Consistent labels teach models to recognize patterns, context, and intent reliably.

In Media AI, this includes tagging video scenes, transcribing audio, classifying text, and enriching content with metadata or sentiment signals. These labels shape how models interpret content and make decisions.
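To make this concrete, a single media annotation can be represented as a small record that ties a label to an asset, a modality, and (for audio and video) a time span. The schema below is a hypothetical sketch: the field names, the four modality values, and the validation rules are assumptions for illustration, not an industry standard or any vendor's format.

```python
from dataclasses import dataclass, field
from typing import Optional

MODALITIES = {"video", "audio", "image", "text"}


@dataclass
class Annotation:
    """One label applied to (part of) a media asset. Hypothetical schema."""
    asset_id: str                    # ID of the video, audio file, image, or article
    modality: str                    # one of MODALITIES
    label: str                       # e.g. a scene tag, topic class, or sentiment value
    start_s: Optional[float] = None  # span start in seconds, for time-based media
    end_s: Optional[float] = None    # span end in seconds
    annotator: str = "unknown"       # human reviewer ID or model name
    confidence: float = 1.0          # 1.0 for human labels, model score otherwise
    attributes: dict = field(default_factory=dict)  # extra metadata, e.g. transcript text

    def __post_init__(self):
        # Reject records that would silently corrupt training data
        if self.modality not in MODALITIES:
            raise ValueError(f"unsupported modality: {self.modality}")
        if (self.start_s is None) != (self.end_s is None):
            raise ValueError("start_s and end_s must be set together")
        if self.start_s is not None and self.end_s < self.start_s:
            raise ValueError("end_s must not precede start_s")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


scene = Annotation("ep-101", "video", "crowd_scene", 12.0, 18.5, annotator="reviewer-7")
mood = Annotation("ep-101", "audio", "tense", 12.0, 15.2, annotator="model-v2",
                  confidence=0.82, attributes={"text": "They're not going to make it."})
print(scene.label, scene.end_s - scene.start_s, mood.confidence)
```

Validating records at creation time is one simple way to keep malformed labels (reversed spans, unknown modalities, out-of-range scores) from reaching a training set.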

Without high-quality data annotation, AI systems lack a reliable learning foundation, leading to inconsistent outputs and poor performance at scale.

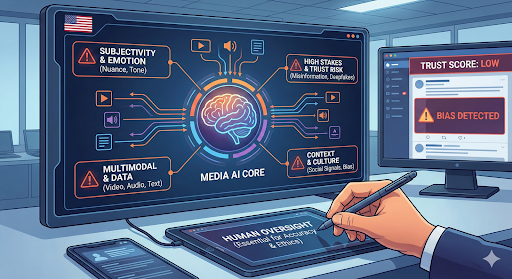

Why Media AI Is More Complex

Media AI operates across emotion, context, and intent in largely unstructured environments. With nearly 90% of data held in unstructured formats such as video, audio, images, and text, achieving accuracy at scale is far more complex than in typical enterprise AI.

Subjectivity and Emotional Nuance

Media content relies on emotion and interpretation, not just facts. AI often struggles to capture tone, intent, and nuance, resulting in generic or misaligned outputs.

High Stakes and Trust Risk

Errors in Media AI carry reputational and societal consequences. Misinformation, deepfakes, and biased outputs directly affect trust, credibility, and platform integrity.

Multimodal and Unstructured Data

Media AI must interpret text, audio, images, and video together. Aligning meaning across these formats introduces complexity that single-modality enterprise AI does not face.

Context and Cultural Sensitivity

Media AI models often fail to accurately interpret cultural signals and shifting social contexts, leading to inappropriate or incorrect outputs.

Probabilistic Behavior at Scale

Media AI models are probabilistic rather than rule-based: they learn statistical patterns, not meaning. As scale increases, models risk sounding plausible while drifting from accuracy or intent.

Human Oversight Cannot Be Removed

Fully autonomous Media AI remains unrealistic. Human-in-the-loop oversight is essential to handle ambiguity, ethical boundaries, and edge cases at scale.

How High-Quality Data Annotation Improves Media AI

High-quality data annotation enables Media AI to scale with accuracy. Straive delivers AI-assisted annotation and expert human-in-the-loop operations built for complex media environments.

AI-Assisted, Well-Governed Labeling

AI-assisted labeling accelerates annotation while governance ensures consistency. This combination improves model learning and delivers higher accuracy across large and evolving content volumes.
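A generic way to combine AI-assisted labeling with governance is confidence-based routing. A model proposes labels, high-confidence proposals are accepted, and the rest go to human annotators, while a small random share of accepted labels is audited. The sketch below assumes the model emits a score between 0 and 1. The 0.9 threshold and 5% audit rate are illustrative defaults, and this is not a description of any specific vendor's pipeline.

```python
import random
from typing import NamedTuple


class PreLabel(NamedTuple):
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_prelabels(prelabels, accept_threshold=0.9, audit_rate=0.05, seed=0):
    """Split model pre-labels into an auto-accepted list and a human-review queue.

    Items below accept_threshold always go to review. Items at or above it are
    still sent to review with probability audit_rate, as a quality spot check.
    """
    rng = random.Random(seed)  # seeded so the audit sample is reproducible
    accepted, review = [], []
    for p in prelabels:
        if p.confidence >= accept_threshold and rng.random() >= audit_rate:
            accepted.append(p)
        else:
            review.append(p)
    return accepted, review


batch = [
    PreLabel("clip-1", "sports", 0.97),
    PreLabel("clip-2", "news", 0.55),   # ambiguous: needs a human
    PreLabel("clip-3", "music", 0.91),
]
accepted, review = route_prelabels(batch, audit_rate=0.0)
print([p.item_id for p in accepted], [p.item_id for p in review])
```

Raising the threshold sends more items to people (slower, more control); lowering it automates more (faster, more risk). The audit sample is what lets a team measure whether the accepted labels deserve that trust.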

Expert Human-in-the-Loop Validation

Human review corrects edge cases, ambiguity, and bias that automation misses. Expert oversight reduces false positives and strengthens decision quality in high-risk media workflows.

Policy-Aligned Moderation Workflows

Annotation aligned to platform policies and standards enables consistent enforcement. This improves compliance decisions and reduces variability across regions, teams, and content types.

Multimodal Annotation Across Media Formats

Annotating text, images, audio, and video together creates richer metadata. This strengthens content discovery, searchability, and downstream personalization accuracy.
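Multimodal annotation often means linking labels from different formats that describe the same moment. As a minimal sketch, assuming video scene tags and transcript segments both carry start and end timestamps in seconds, the code below attaches transcript lines to the scenes they overlap. The segment contents and the 0.5-second minimum overlap are hypothetical.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    start_s: float
    end_s: float
    label: str  # scene tag for video, spoken text for transcript segments


def overlap_s(a, b):
    """Length of the time overlap between two segments, in seconds (0 if disjoint)."""
    return max(0.0, min(a.end_s, b.end_s) - max(a.start_s, b.start_s))


def align(scenes, transcript, min_overlap_s=0.5):
    """Attach each transcript segment to every scene it overlaps by at least min_overlap_s."""
    enriched = []
    for scene in scenes:
        lines = [t.label for t in transcript if overlap_s(scene, t) >= min_overlap_s]
        enriched.append({"scene": scene.label, "start_s": scene.start_s,
                         "end_s": scene.end_s, "transcript": lines})
    return enriched


scenes = [Segment(0, 10, "studio_intro"), Segment(10, 30, "match_highlights")]
transcript = [
    Segment(1, 4, "Welcome back"),
    Segment(9.8, 14, "Let's look at the goals"),  # only 0.2 s inside the intro
    Segment(20, 25, "What a finish"),
]
for row in align(scenes, transcript):
    print(row)
```

The minimum-overlap rule keeps a line that merely grazes a scene boundary from being attached to both scenes, which is one of the small consistency decisions an annotation guideline has to pin down.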

Clean, Validated Training Data for Generative AI

Validated annotation pipelines produce dependable training data for generative models. This reduces risk, improves output quality, and supports safer GenAI deployment at scale.

Why Human-in-the-Loop Annotation Is Essential

Automation enables scale, but Media AI operates in environments defined by ambiguity, judgment, and risk. Straive’s human-in-the-loop model positions human oversight as a core operational layer, not a fallback.

Manual + Straive AI/LLM Foundry

Early-stage Media AI relies on human expertise supported by AI-assisted tools. This approach ensures quality, context, and policy alignment while models learn from real-world data.

AI Solution + Straive Expert-in-Loop Ops

As AI matures, expert reviewers remain embedded in workflows to handle ambiguity and edge cases. Human oversight corrects errors, reduces bias, and guides model improvement.

Autonomous AI

Automation increases only after systems demonstrate consistent accuracy. Even at this stage, humans monitor outcomes and intervene when models encounter unfamiliar or high-risk scenarios.
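The rule of increasing automation only after consistent accuracy can be expressed as a simple check over recent human audits. This sketch assumes each audit batch is a list of (model label, human label) pairs. The 98% target and four-batch window are hypothetical parameters a team would set for its own risk tolerance.

```python
def batch_accuracy(batch):
    """Share of audited items where the model's label matched the human reviewer's."""
    if not batch:
        raise ValueError("audit batch is empty")
    return sum(model == human for model, human in batch) / len(batch)


def ready_for_more_automation(audit_batches, target=0.98, window=4):
    """True only if each of the last `window` audit batches met the target accuracy."""
    if len(audit_batches) < window:
        return False  # not enough audit history to show consistency
    return all(batch_accuracy(b) >= target for b in audit_batches[-window:])


good = [("news", "news")] * 50                               # 100% agreement
weak = [("news", "news")] * 48 + [("news", "sports")] * 2    # 96% agreement
print(ready_for_more_automation([good, good, weak, good]))   # one weak batch blocks it
print(ready_for_more_automation([weak, good, good, good, good]))
```

Requiring every batch in the window to pass, rather than the average, reflects the idea of *consistent* accuracy: one poor week is enough to hold automation at its current level.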

Continuous AI Operationalization

Media environments constantly change, requiring ongoing validation and retraining. Human feedback loops ensure annotation quality and model performance remain reliable over time.

In production environments, data annotation functions as infrastructure rather than a one-time task. When annotation quality breaks down, accuracy, trust, and scalability fail together, regardless of model sophistication.

Annotation-Driven Outcomes in Media AI

At Straive, we embed high-quality data annotation and expert human oversight directly into Media AI workflows, helping platforms improve accuracy, speed, and trust at scale.

By combining AI-assisted metadata annotation with human-in-the-loop validation, we helped a large entertainment discovery platform achieve 99.5%+ metadata accuracy across 5,500+ channels, significantly improving content discovery and catalog consistency at scale.

In a broadcast advertising environment, advertiser clustering based on behavioral data replaced demographic segmentation, enabling targeted product creation and cross-sell strategies that delivered a 5% increase in share of wallet and $2.3 million in incremental revenue.

For long-form broadcast content, we delivered structured annotation and expert-led sentiment analysis to align emotional cues with viewer behavior, helping identify programming optimizations with a potential 12% uplift in ratings.

How Straive Supports Accurate Media AI

With Media AI scaling up in 2026, data annotation is now an operational priority. Straive leads this shift by integrating AI-assisted and expert human annotation directly into global media workflows.

Straive, with 20,000+ specialists in 30+ countries, provides high-volume, multimodal annotation (text, audio, image, video). This model has delivered 99.5%+ data accuracy across 5,500+ channels, 50% faster turnaround, and 15–20% better model accuracy in media environments.

Accurate sentiment and intent labeling within these workflows enables more relevant and personalized user experiences at scale. By combining data analytics, annotation governance, and expert judgment, media organizations can move from experimentation to production-ready Media AI, scaling accuracy, trust, and performance despite growing content complexity.

Anubhav Bairathi | Client Partner, Analytics & AI

An IIT Bombay alumnus with 18 years of experience, Anubhav specializes in operationalizing AI at scale. He bridges the gap between complex data challenges and business value for the Media and Financial Services sectors. An expert in predictive analytics and data management, he is a trusted leader in building high-performing teams and scalable architectures that power the modern AI enterprise.